You may have seen headlines this week about the Samsung Galaxy S23 Ultra taking so-called “fake” moon photos. Ever since the S20 Ultra, Samsung has had a feature called Space Zoom that marries its 10X optical zoom with massive digital zoom to reach a combined 100X zoom. In marketing images, Samsung has shown its phone taking near crystal-clear photos of the moon, and users have done the same on a clear night.
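The arithmetic behind a combined figure like 100X is simple. The optics supply 10X, so the remaining 10X has to come from cropping the telephoto frame, and that crop throws away most of its pixels. The minimal sketch below assumes digital zoom is a plain center crop (real pipelines also merge and upscale frames). The 10-megapixel sensor size is a hypothetical value chosen for illustration.

```python
def digital_zoom_budget(total_zoom: float, optical_zoom: float, sensor_megapixels: float):
    """Split a combined zoom figure into its optical and digital parts.

    Assumes digital zoom is a center crop: zooming by a factor k in each
    dimension keeps 1/k**2 of the sensor's pixels.
    """
    if total_zoom < optical_zoom:
        raise ValueError("total zoom cannot be below the optical zoom")
    digital_factor = total_zoom / optical_zoom
    kept_fraction = 1 / digital_factor**2
    return digital_factor, kept_fraction, sensor_megapixels * kept_fraction


# 100X combined from 10X optical; the 10 MP sensor size is an assumption.
factor, fraction, megapixels = digital_zoom_budget(100, 10, 10)
print(f"{factor:g}X digital crop keeps {fraction:.0%} of the pixels (~{megapixels:g} MP)")
# prints "10X digital crop keeps 1% of the pixels (~0.1 MP)"
```

Everything the final 100X image shows beyond that small slice of real pixel data has to be reconstructed by processing.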

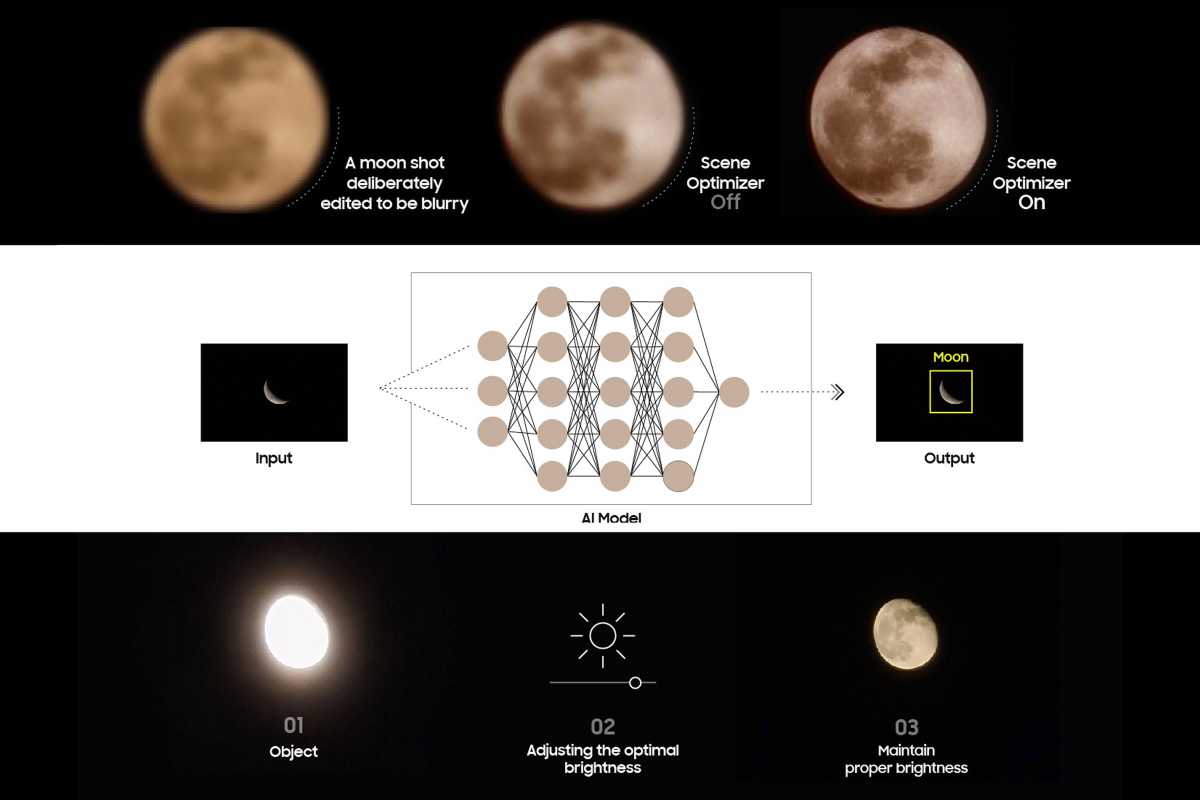

But a Redditor has demonstrated that Samsung’s incredible Space Zoom relies on a bit of trickery. It turns out that when you take pictures of the moon, Samsung’s AI-based Scene Optimizer does a whole lot of heavy lifting to make it look like the moon was photographed with a high-resolution telescope rather than a smartphone. So when someone takes a shot of the moon, whether in the sky or on a computer screen as in the Reddit post, Samsung’s computational engine takes over and clears up the craters and contours that the camera had missed.

In a follow-up post, they show beyond much doubt that Samsung is indeed adding “moon” imagery to photos to make the shot clearer. As they explain, “The computer vision module/AI recognizes the moon, you take the picture, and at this point, a neural network trained on countless moon images fills in the details that were not available optically.” That’s a bit more “fake” than Samsung lets on, but it’s still very much to be expected.
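The reasoning behind this kind of test rests on a simple idea. Once fine detail has been blurred out of the source, the camera has nothing to recover optically, so any crater texture in the final shot must have been added afterward. The toy 1D sketch below illustrates only that idea. It is not the Redditor's actual procedure, which this article doesn't detail. The [1, 2, 1] / 4 blur kernel and the "detail" score are assumptions chosen for simplicity.

```python
def blur(signal):
    """Blur a 1D row of pixel values with a [1, 2, 1] / 4 kernel (edges wrap around)."""
    n = len(signal)
    return [(signal[i - 1] + 2 * signal[i] + signal[(i + 1) % n]) / 4 for i in range(n)]


def detail(signal):
    """Mean absolute difference between neighboring pixels: a crude sharpness score."""
    return sum(abs(a - b) for a, b in zip(signal, signal[1:])) / (len(signal) - 1)


# A row of "crater" texture: the finest possible alternating detail.
row = [0, 100] * 8
blurred = blur(row)

print(detail(row))      # 100.0
print(detail(blurred))  # 0.0, every pixel becomes 50.0
```

This kernel wipes out the finest alternating pattern entirely. A camera photographing the blurred version therefore has no optical path back to the original texture.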

Even without the investigative work, it should be fairly obvious that the S23 can’t naturally take clear shots of the moon. While Samsung says Space Zoom shots on the S23 Ultra are “capable of capturing images from an incredible 330 feet away,” the moon is nearly 239,000 miles away, or roughly 1,261,392,000 feet. It’s also about a quarter the diameter of the Earth. Smartphones have no problem taking clear photos of skyscrapers that are more than 330 feet away, after all.
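The distance comparison can be checked with plain arithmetic. The article's figure of 1,261,392,000 feet corresponds to 238,900 miles, which is close to the moon's average distance from Earth. This sketch converts that distance to feet and compares it with Samsung's quoted 330-foot range.

```python
FEET_PER_MILE = 5_280

moon_distance_miles = 238_900   # reproduces the 1,261,392,000-foot figure
space_zoom_range_feet = 330     # Samsung's quoted Space Zoom distance

moon_distance_feet = moon_distance_miles * FEET_PER_MILE
ratio = moon_distance_feet // space_zoom_range_feet

print(f"{moon_distance_feet:,} feet")       # 1,261,392,000 feet
print(f"{ratio:,}x farther than 330 feet")  # 3,822,400x farther than 330 feet
```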

Of course, the moon’s distance doesn’t tell the whole story. The moon is essentially a light source set against a dark background, so the camera needs a bit of help to capture a clear image. Here’s how Samsung explains it: “When you’re taking a photo of the moon, your Galaxy device’s camera system will harness this deep learning-based AI technology, as well as multi-frame processing in order to further enhance details. Read on to learn more about the multiple steps, processes, and technologies that go into delivering high-quality images of the moon.”
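Samsung doesn't spell out its multi-frame pipeline. The simplest form of the general technique is averaging several exposures of the same scene: random sensor noise partly cancels, shrinking by roughly the square root of the frame count. The sketch below assumes a static scene, perfectly aligned frames, and Gaussian noise. The scene values and noise level are hypothetical.

```python
import random
import statistics


def capture_frame(scene, noise_sigma, rng):
    """Simulate one noisy exposure: the true scene plus Gaussian sensor noise."""
    return [pixel + rng.gauss(0, noise_sigma) for pixel in scene]


def average_frames(frames):
    """Merge a burst by averaging each pixel position across all frames."""
    return [statistics.fmean(values) for values in zip(*frames)]


def rms_error(image, scene):
    """Root-mean-square difference between an image and the true scene."""
    return (sum((a - b) ** 2 for a, b in zip(image, scene)) / len(scene)) ** 0.5


rng = random.Random(42)
scene = [200.0] * 10_000  # a flat, bright patch (hypothetical values)
frames = [capture_frame(scene, noise_sigma=10.0, rng=rng) for _ in range(16)]

single = rms_error(frames[0], scene)
merged = rms_error(average_frames(frames), scene)
print(f"single frame: {single:.2f}, 16-frame average: {merged:.2f}")
```

With 16 frames, the noise should drop to roughly a quarter of the single-frame level. Note that averaging only recovers detail the sensor actually captured across the burst. Unlike the moon-specific AI step, it cannot invent new detail.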

It’s not all that different from features like Portrait Mode, Portrait Lighting, Night Mode, Magic Eraser, or Face Unblur. They all use computational awareness to add, adjust, and edit things that aren’t there. In the case of the moon, it’s easy for Samsung’s AI to make it look like the phone is taking incredible pictures, because Samsung’s AI knows what the moon looks like. It’s the same reason why the sky sometimes looks too blue or the grass too green. The photo engine applies what it knows to what it sees to mimic a higher-end camera and compensate for normal smartphone shortcomings.

The difference here is that, while it’s common for photo-taking algorithms to segment an image into parts and apply different adjustments and exposure controls to each, Samsung is also using a limited form of AI image generation on the moon to blend in details that were never in the camera data to begin with. But you wouldn’t know it, because the moon’s details always look the same when viewed from Earth.
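For contrast, here is a toy version of the common, non-generative approach: split the frame into regions, then adjust each region differently. This is a minimal sketch, not any vendor's algorithm. It assumes a simple brightness threshold as the "segmentation" and per-region exposure gains. The pixel values, threshold, and gains are made up.

```python
def segment(image, threshold):
    """Label each pixel True ("moon") if brighter than threshold, else False ("sky")."""
    return [[pixel > threshold for pixel in row] for row in image]


def adjust_regions(image, mask, moon_gain, sky_gain):
    """Apply a separate exposure gain to each region, clamping to the 0-255 range."""
    return [
        [min(255, round(pixel * (moon_gain if is_moon else sky_gain)))
         for pixel, is_moon in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]


# A tiny grayscale frame: an overexposed moon against a noisy sky (made-up values).
frame = [
    [12, 15, 10, 14],
    [11, 250, 255, 13],
    [16, 248, 252, 12],
    [10, 14, 11, 15],
]
mask = segment(frame, threshold=128)
result = adjust_regions(frame, mask, moon_gain=0.8, sky_gain=0.5)
for row in result:
    print(row)
```

Every output pixel here is a rescaled version of a captured pixel. The generative step described above differs because it adds texture that no input pixel contained.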

Samsung says the S23 Ultra’s camera uses Scene Optimizer’s “deep-learning-based AI detail enhancement engine to effectively eliminate remaining noise and enhance the image details even further.”

Image: Samsung

What will Apple do?

Apple is heavily rumored to add a periscope zoom lens to the iPhone 15 Ultra for the first time this year, and this controversy will no doubt weigh on how it trains its AI. But you can be sure that the computational engine will do a fair amount of heavy lifting behind the scenes, as it does now.

That’s what makes smartphone cameras so great. Unlike point-and-shoot cameras, our smartphones have powerful brains that can help us take better photos and make bad photos look better. They can make nighttime photos look like they were taken in good lighting and simulate the bokeh effect of a camera with an ultra-fast aperture.

And it’s what will let Apple get incredible results at 20X or 30X zoom from a 6X optical camera. Since Apple has so far steered away from astrophotography, I doubt it will go as far as sampling higher-resolution moon photos to help the iPhone 15 take clearer shots, but you can be sure that its Photonic Engine will be hard at work cleaning up edges, preserving details, and boosting the capabilities of the telephoto camera. And based on what we get with the iPhone 14 Pro, the results will surely be impressive.
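Going from 6X optical to 30X implies 5X of digital zoom. On its own, that is just a crop followed by an upscale, which enlarges existing pixels without adding any detail. Closing that gap is the job of the processing described above. The toy sketch below assumes a hypothetical 10 x 10 sensor readout and nearest-neighbor upscaling. Real pipelines use far better interpolation plus multi-frame data.

```python
def center_crop(image, factor):
    """Keep the central 1/factor of the image in each dimension."""
    h, w = len(image), len(image[0])
    ch, cw = h // factor, w // factor
    top, left = (h - ch) // 2, (w - cw) // 2
    return [row[left:left + cw] for row in image[top:top + ch]]


def upscale_nearest(image, factor):
    """Enlarge by repeating each pixel factor x factor times (nearest neighbor)."""
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


optical, target = 6, 30
digital = target // optical  # the remaining 5X must come from cropping

# A hypothetical 10 x 10 sensor readout with a distinct value per pixel.
sensor = [[r * 10 + c for c in range(10)] for r in range(10)]
zoomed = upscale_nearest(center_crop(sensor, digital), digital)

print(len(zoomed), "x", len(zoomed[0]))         # 10 x 10
print(len({p for row in zoomed for p in row}))  # 4 distinct source pixels
```

The zoomed frame is full-size, but it is built from only 4 of the original 100 pixels. Everything that makes a real 30X shot look sharp has to come from computation.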

Whether it’s Samsung or Apple, computational photography has enabled some of the biggest breakthroughs of the past several years, and we’ve only just scratched the surface of what it can do. None of it is actually real. And if it were, we’d all be a lot less impressed with the photos we take with our smartphones.