Since the launch of ChatGPT, there has been a proliferation of similar AI chatbots. However, the spread of these chatbots also means that problems are brewing. OpenAI stunned the world with the capabilities of ChatGPT, and as a result there have been many objections to its use, some of which involve legal battles. However, in the U.S., the home country of ChatGPT, the law may protect it. Section 230 of the US Communications Decency Act, enacted in 1996, says that a company does not have to bear legal responsibility for content published by third parties or users on its platform.

However, the U.S. Supreme Court will decide in the next few months whether to weaken this protection. The decision may also affect AI chatbots such as ChatGPT. The justices are expected to rule by the end of June on whether Alphabet-owned YouTube can be sued for recommending videos to users. Platforms are exempt from liability for user-uploaded videos, but do Section 230 protections still apply when they use algorithms to recommend content to users?

Technology and legal experts say the case has implications beyond social media platforms. They believe that the ruling could spark new debate over whether companies such as OpenAI and Google, which develop AI chatbots, can be immune from legal claims such as defamation or invasion of privacy. Experts point out that this is because the algorithms used by ChatGPT and similar tools work in a way that resembles how YouTube recommends videos to its users.

What do AI chatbots do?

AI chatbots are trained to generate vast amounts of original content, while Section 230 usually applies to third-party content. Courts have yet to consider whether AI chatbot responses would be protected. A Democratic senator said the immunity cannot apply to generative AI tools because these tools “create content.” “Section 230 is about protecting users and websites hosting and organizing user speech. It should not insulate companies from the consequences of their own actions and products,” he said.

The tech industry has been pushing to keep Section 230. Some people think that tools like ChatGPT are like search engines, serving existing content to users based on queries. “AI doesn’t really create anything. It just presents existing content in a different way or in a different format,” said Carl Szabo, vice president and general counsel at the tech industry trade group NetChoice.

Szabo said that if Section 230 were weakened, it would create an impossible task for AI developers. It would also expose them to a flood of lawsuits that could stifle innovation. Some experts speculate that courts may take a neutral stance and examine the context in which AI models generate potentially harmful responses.

The immunity may still apply in cases where the AI model appears merely to explain existing sources. But chatbots like ChatGPT have been known to generate false answers, which experts say may not be protected.

Hany Farid, a technologist and professor at the University of California, Berkeley, said it is far-fetched to think that AI developers should be immune from lawsuits over the models they “program, train and deploy.”

“If companies can be held liable in civil suits, they make safer products; if they are exempt, the products tend to be less safe,” Farid said.

Issues with AI tools like ChatGPT

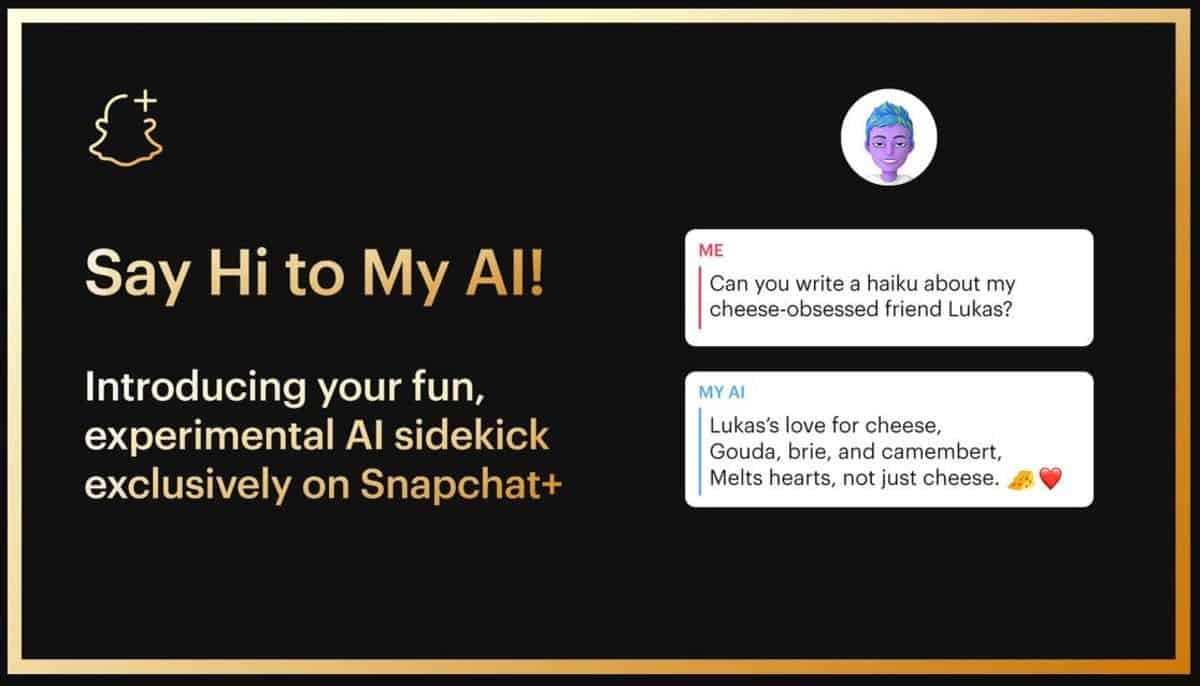

AI chatbots are now very popular because they provide an efficient and convenient way for people to interact. These chatbots are capable of processing natural language and providing relevant responses to queries. This makes them a useful tool for customer service, sales, and support. However, concerns have been raised about the safety of using AI chatbots, mostly in terms of privacy and security.

One of the main concerns is that AI chatbots could be an opening for data breaches and cyber attacks. As chatbots often require users to provide personal information such as their name, address, and payment details, there is a risk that this information could be stolen. To reduce this risk, companies must ensure that their chatbots are secure and use encryption to protect user data.

Another issue with AI chatbots is the potential for the system to succumb to bias. As these chatbots are programmed by humans, they may reflect biases and stereotypes that exist in society. For example, a chatbot designed to screen job seekers may discriminate against certain groups based on their race, gender, or ethnicity. To avoid this, chatbot developers must ensure that their algorithms are as close to bias-free as possible.

There is also a risk that AI chatbots may not be able to handle complex queries, including those that relate to mental health or domestic abuse. In such cases, users may not receive the support they need. This is one of the areas where people want these chatbots to be held liable. If they give a wrong response in certain scenarios, the outcome could be very harmful.

Final Words

Despite these concerns, AI chatbots can be safe to use if put to proper use. Some people want to use AI chatbots to replace doctors and other experts, but these are not ideal uses of the tool. For their part, developers must avoid bias at all costs and ensure that the system is inclusive. Chatbots should also not be left to work without human oversight. From time to time, they should be checked and upgraded if need be. To ensure that they are safe to use, developers must address concerns around security, privacy, bias, and the limits of chatbots. With the right tools in place, AI chatbots can make the search for information very easy. They can also be a pretty decent assistant to the many people who need some sort of help with information.