AI voice scams have become more prevalent and can be extremely convincing because it sounds like you’re talking to a loved one. Now we’ve got an in-depth report that digs into how AI voice cloning works, how common the scams are, the odds of falling for one, the average cost, plus how to prevent and protect against AI voice scams.

In April, we saw some real-world examples of next-gen AI scams that are pretty scary. One of them used call spoofing so a loved one showed up on the victim’s phone as the person calling. Another used an AI voice clone to try to extort ransom money from a mother for the release of her daughter, who hadn’t actually been kidnapped.

As I noted in the piece above, it’s likely only a matter of time before attackers combine call spoofing with AI voice clones.

Now McAfee has released an in-depth report on AI voice scams to help build awareness of the threat, along with a few easy ways to prevent and protect against it.

How does AI voice-cloning work?

McAfee highlights that AI voice scams are a remix of “imposter scams,” which have been around for a long time, but they can be much more convincing. Typically, the scammer uses a loved one’s voice to ask for money for an emergency or, in some cases, pretends to be holding a loved one for ransom.

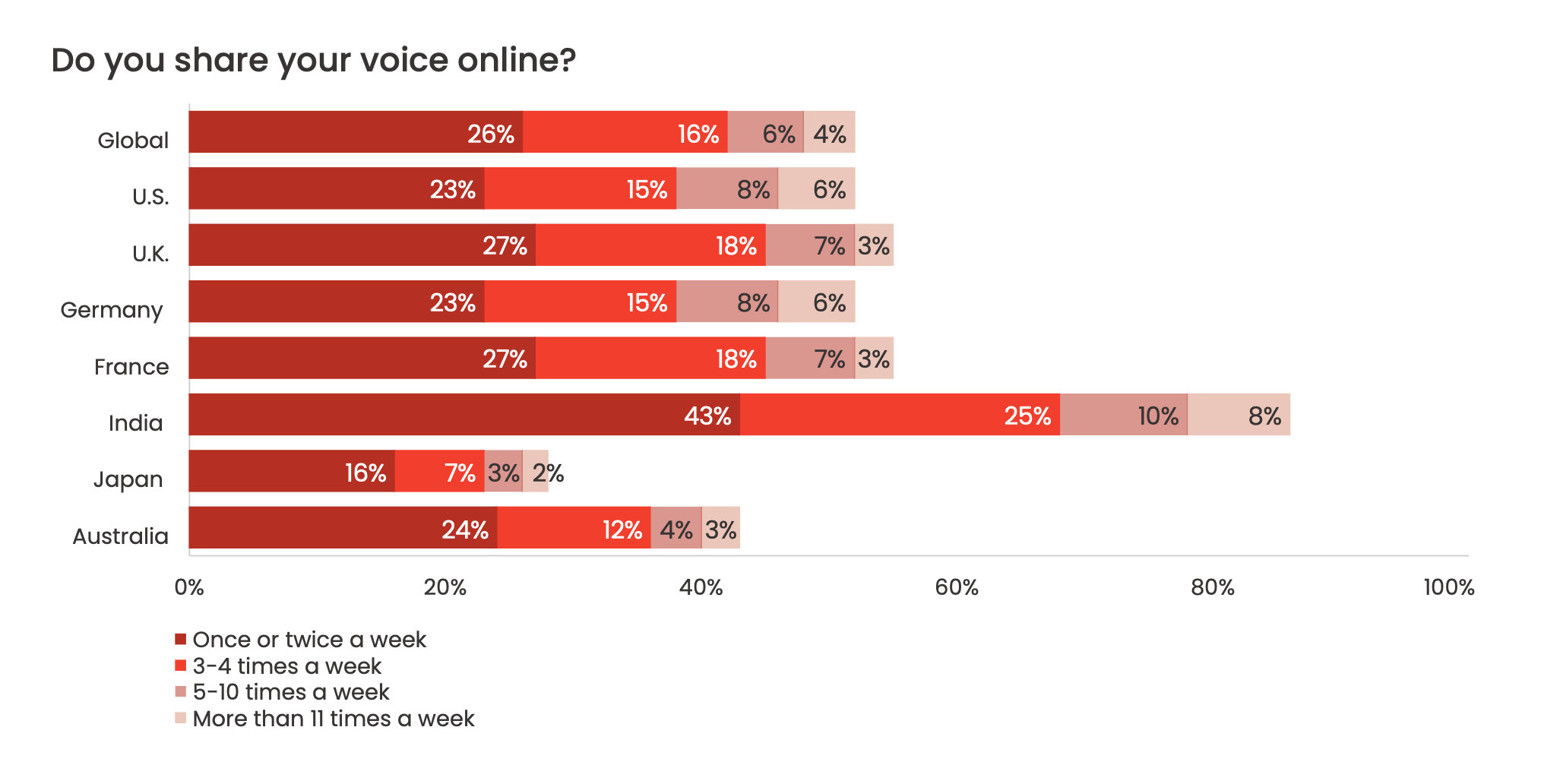

Because AI voice-cloning tools are so cheap and accessible, it’s quick and easy for malicious parties to create voice clones. They get the sample audio they need from people sharing their voices on social media, and the more you share your voice online, the easier it is for threat actors to find and clone it.

How common are AI voice scams?

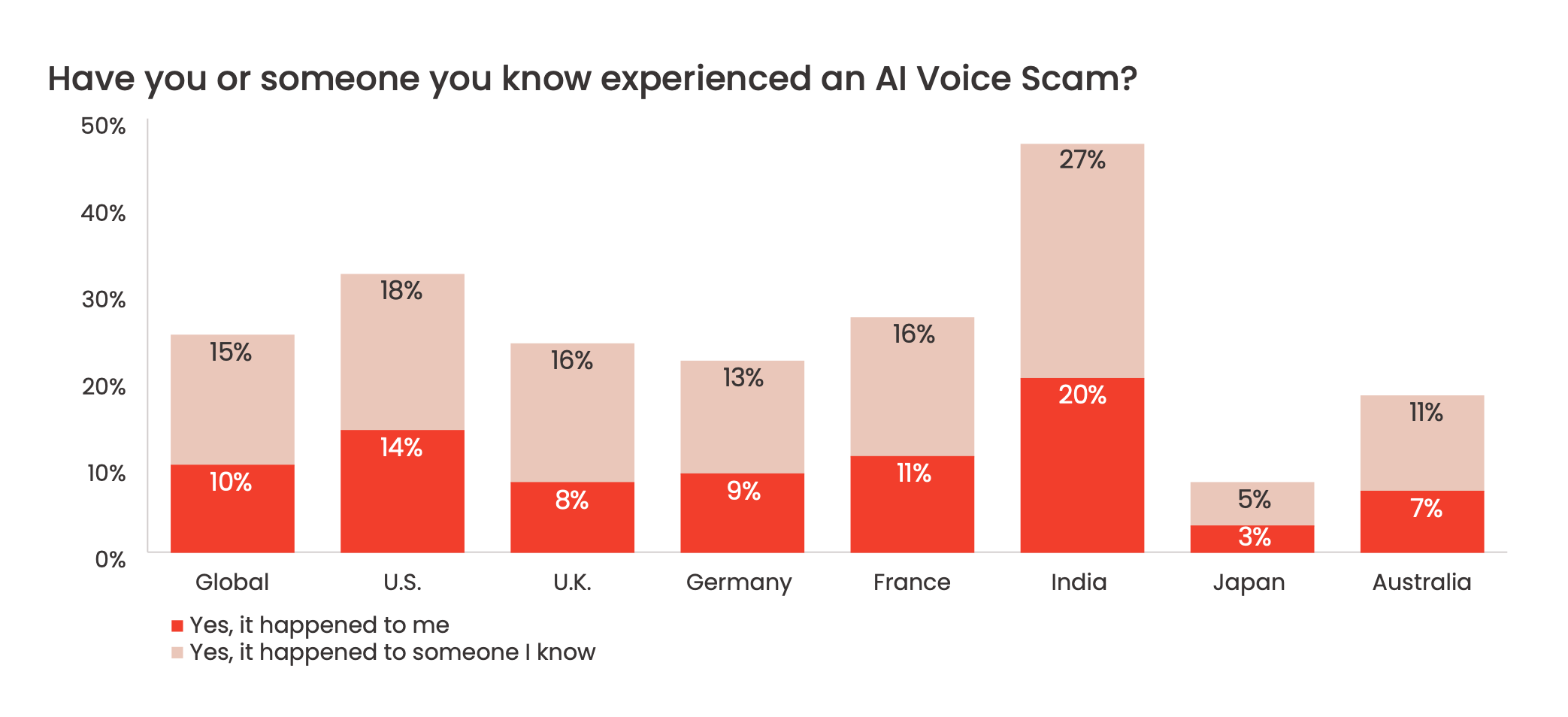

While we’ve only just started to see real-world stories about AI voice scams in the news, McAfee’s study found they’re becoming quite common.

Globally, 25% of people surveyed said they had either experienced an AI scam themselves or knew someone who had.

That figure was higher in the US at 32%, while India saw the most trouble with AI voice scams, with 47% of respondents saying they or someone they know had been affected.

How accurate is it?

McAfee’s research found that voice-cloning tools can deliver up to 95% accuracy.

In the publicly reported cases of AI voice-cloning scams, victims have recounted how the voice sounded “just like” the person being cloned. In one particularly egregious case, where a cybercriminal demanded a ransom for a fake kidnapping, the mother said it was “completely her voice” and that “it was her inflection.” It’s now harder than ever to tell real from fake, so people will need to assume they can’t always believe what they see and hear.

How often do victims lose money, and how much?

- Unfortunately, McAfee’s research shows that 77% of AI voice scam victims lose money

- More than one-third lost over $1,000

- 7% were duped out of between $5,000 and $15,000

- In the US, that figure is highest, with 11% losing between $5,000 and $15,000

Overall, imposter scams are believed to have stolen $2.6 billion in 2022.

How to prevent and protect against AI scams

As I previously wrote, and as McAfee also shares, three major ways to prevent and protect against AI voice scams are:

- Limit how much you share your voice and/or video online, and/or set your social media accounts to private instead of public

- Ask a challenge question, or even two, if you get a suspicious call: something only your loved one would be able to answer (e.g., the name of your childhood stuffed animal)

- Remember, don’t ask a question whose answer could be found on social media or elsewhere online

- Let unknown numbers go to voicemail, and call or text the person directly from your phone if you’re concerned about them

Here are McAfee’s full tips:

- Think before you click and share. Who is in your social media network? Do you really know and trust your connections? Be thoughtful about what you’re sharing on Facebook, YouTube, Instagram, and TikTok. Consider limiting your posts to just friends and family through the privacy settings. The wider your connections, the more risk you may be opening yourself up to when sharing content about yourself.

- Identity monitoring services can help alert you if your personally identifiable information is available on the Dark Web. Identity theft is often where AI voice and other targeted scams start. Take control of your personal data so a cybercriminal can’t pose as you. Identity monitoring services provide a layer of protection that can help safeguard your identity.

- And four ways to avoid falling for an AI voice scam directly include:

- 1. Set a ‘codeword’ with kids, family members, or trusted close friends that only they would know. Make a plan to always ask for it if they call, text, or email to ask for help, particularly if they’re older or more vulnerable.

- 2. Always question the source. If it’s a call, text, or email from an unknown sender, or even if it’s from a number you recognize, stop, pause, and think. Asking directed questions can throw off a scammer, for instance, “Can you confirm my son’s name?” or “When is your father’s birthday?” Not only can this take the scammer by surprise, but they may also need to generate a new response, which can add unnatural pauses to the conversation and create suspicion.

- 3. Don’t let your emotions take over. Cybercriminals are counting on your emotional connection to the person they’re impersonating to spur you into action. Take a step back before responding. Does that really sound like them? Is this something they’d ask of you? Hang up and call the person directly, or try to verify the information before responding.

- 4. Consider whether to answer unexpected calls from unknown phone numbers. It’s generally good advice not to answer calls from strangers. If they leave a voicemail, this gives you time to reflect and contact loved ones independently to confirm their safety.

For more details, check out the full report. You can also read more about AI voice scams on the FTC’s website.

Top image via McAfee
