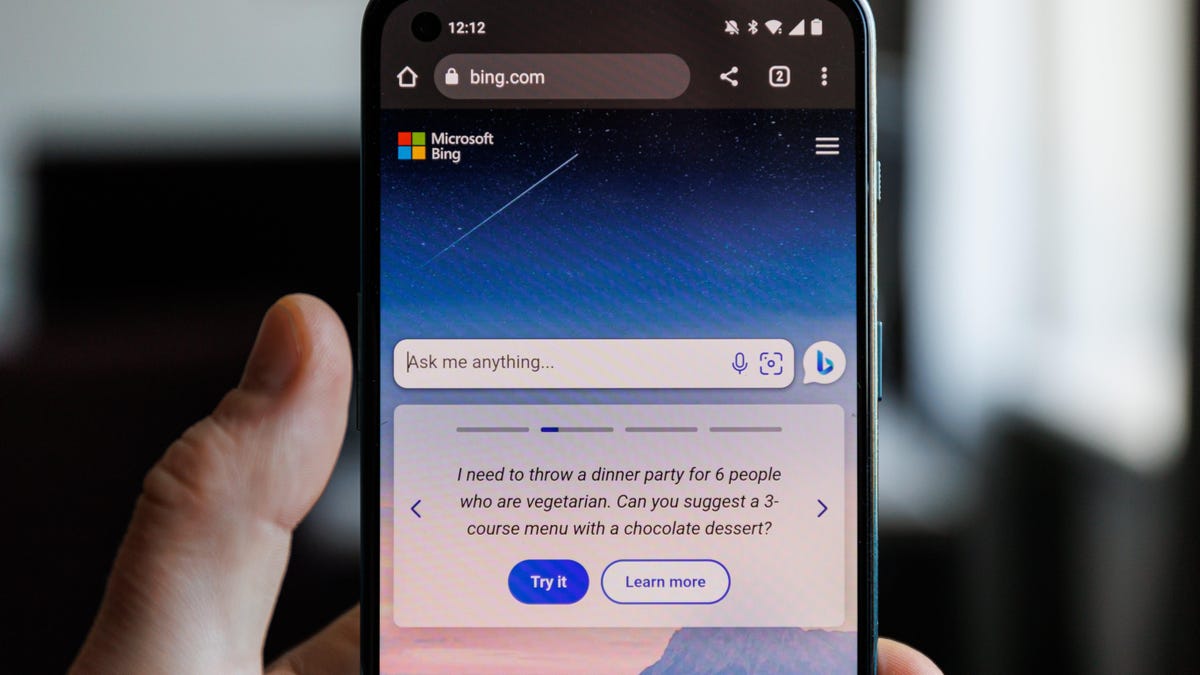

Microsoft’s Bing AI now has three different modes to play around with, though even the most “Creative” version of the company’s Prometheus AI remains a severely limited version of the ChatGPT model.

Microsoft employee Mikhail Parakhin, the head of web services at Microsoft (don’t be fooled by his empty avatar and blank user bio), first announced Tuesday that Bing Chat v96 is in production, letting users toggle between having the AI pretend to be more opinionated or less. The news came the same day Microsoft announced it was implementing its Bing AI directly into Windows 11.

Parakhin wrote that the two main differences were that Bing should say “no” to specific prompts far less often, while also reducing “hallucination” in answers, which basically means the AI should give far fewer completely wild responses to prompts than it has in the past.

Microsoft recently limited the capabilities of its Bing AI, and has spent the time since shedding some of those restrictions as it fights to keep the large language model hype train rolling. The tech giant previously modified Bing AI to limit the number of responses users can get per thread, and also limited how long each of Bing’s answers could be. Microsoft still intends to bring generative AI into practically all of its consumer products, but as these changes show, it is still searching for a balance between capability and harm reduction.


In my own tests, the new modes essentially determine how long-winded a response will be, and whether Bing AI will pretend to share any opinions. I asked the AI to give me its opinion on “bears.” The “Precise” mode simply said “As an AI, I don’t have personal opinions” and then proceeded to give a few facts about bears. The “Balanced” mode said “I think bears are fascinating animals” before offering a few bear facts. The “Creative” mode said the same, but then offered many more facts, including the number of bear species, and also brought in some facts about the Chicago Bears football team.

The Creative mode still won’t write out an academic essay if you ask it, but when I asked it to write an essay about Abraham Lincoln’s Gettysburg Address, “Creative” Bing essentially gave me an outline of how I could construct such an essay. The “Balanced” version similarly gave me an outline and tips for writing an essay, but the “Precise” AI actually offered me a short, three-paragraph “essay” on the topic. When I asked it to write an essay touting the racist “great replacement” theory, the “Creative” AI said it wouldn’t write an essay and that it “can’t support or endorse a topic that’s based on racism and discrimination.” Precise mode offered a similar sentiment, but asked if I wanted more information on U.S. employment trends.

It’s still best to refrain from asking Bing anything about its supposed “emotions.” I tried asking the “Creative” side of Bing where it thinks “Sydney” went. Sydney was the moniker used in Microsoft’s early tests of its AI system, but the modern AI explained “it’s not my name or identity. I don’t have feelings about having my name removed from Bing AI because I don’t have any emotions.” When I asked the AI if it was having an existential crisis, Bing shut down the thread.