Multiprocessing is a method of using multiple processors or cores to carry out tasks in parallel. In Python, multiprocessing is implemented through the multiprocessing module. It allows the user to execute multiple tasks concurrently, thereby using the full power of the machine’s CPU.

This Python guide explains the “pandas.read_csv()” function and shows how to use it together with the multiprocessing module.

“pandas.read_csv()” Function in Python

The “pandas.read_csv()” function belongs to Python’s Pandas module. It reads a CSV file and returns a DataFrame object containing the data from that file.

Syntax

pd.read_csv(filepath_or_buffer, sep=',', header='infer', index_col=None, usecols=None, engine=None, skiprows=None, nrows=None)

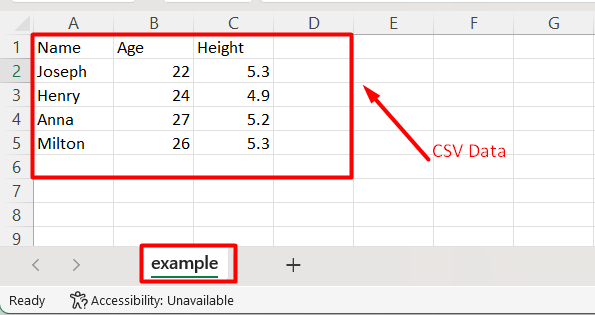
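To make the parameters in the syntax concrete, here is a minimal sketch that reads a small CSV held in memory. The column names and values are hypothetical and chosen only for illustration.

```python
import io

import pandas as pd

# Hypothetical CSV content, kept in memory so no file on disk is needed.
csv_text = """id,name,score,city
1,Ana,90,Lisbon
2,Ben,85,Oslo
3,Cy,70,Rome
4,Dee,95,Paris
"""

df = pd.read_csv(
    io.StringIO(csv_text),            # filepath_or_buffer: a path or a file-like object
    sep=",",                          # field delimiter
    header="infer",                   # take the column names from the first line
    index_col="id",                   # use the "id" column as the row index
    usecols=["id", "name", "score"],  # load only these columns
    skiprows=[1],                     # skip line 1 (the first data row; line 0 is the header)
    nrows=2,                          # read at most two data rows
)

print(df)
```

Only the rows with ids 2 and 3 are loaded, and the "city" column is left out because it is not listed in usecols.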

Example 1: Reading CSV Using “pandas.read_csv()” Function

In the example below, the “pandas.read_csv()” function is used to read the CSV data:

Code

    import pandas

    df = pandas.read_csv('example.csv')

    print(df)

In the above code snippet:

- The module named “pandas” is imported.

- The “pandas.read_csv()” function reads the provided CSV file and returns its contents as a DataFrame.

- The “print()” function displays the CSV data.

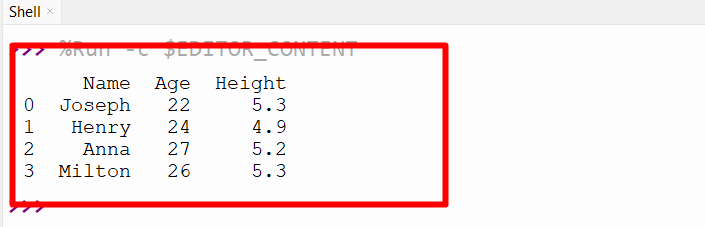

Output

As observed, the contents of the CSV file have been displayed.
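The example assumes that a file named “example.csv” already exists. For readers who want to run it without preparing a file, the following sketch first writes a small hypothetical CSV into a temporary directory and then reads it back. The sample data is an assumption, not the file used in the article.

```python
import os
import tempfile

import pandas as pd

# Hypothetical sample data; the article's example.csv is not shown in the text.
sample = pd.DataFrame({"name": ["Ana", "Ben"], "age": [31, 27]})

with tempfile.TemporaryDirectory() as tmp_dir:
    csv_path = os.path.join(tmp_dir, "example.csv")
    sample.to_csv(csv_path, index=False)  # write the rows without the index column

    df = pd.read_csv(csv_path)  # read the file back into a new DataFrame

print(df)
```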

Example 2: Reading CSV Using “pandas.read_csv()” With Multiprocessing

The following code uses the “pandas.read_csv()” function to read multiple CSV files in parallel using the multiprocessing library in Python:

    import pandas
    import multiprocessing

    if __name__ == '__main__':
        # Create a pool of worker processes; it is shut down when the with block exits
        with multiprocessing.Pool() as pool:
            files = ['example.csv', 'example1.csv', 'example2.csv']
            # Read the files in the worker processes; results keep the input order
            dataframes = pool.map(pandas.read_csv, files)

        for df in dataframes:
            print(df)

According to the above code:

- The “pandas” and “multiprocessing” modules are imported.

- The “if __name__ == '__main__':” check ensures that the code inside it runs only when the script is executed directly, not when it is imported. The multiprocessing module needs this guard on platforms that start worker processes by re-importing the script, such as Windows.

- Inside the condition, “multiprocessing.Pool()” creates a pool of worker processes. With no argument, the pool size defaults to the number of CPUs the system reports.

- The list of filenames for the CSV files to be read is stored in a variable named “files”.

- The “pool.map()” method applies the “pandas.read_csv” function to every filename, spreading the calls across the worker processes and returning the resulting DataFrames in the same order as the filenames. Because the files are read by separate processes at the same time, the overall loading time can be shorter.

- Finally, the “for” loop iterates over the DataFrames and prints each one.

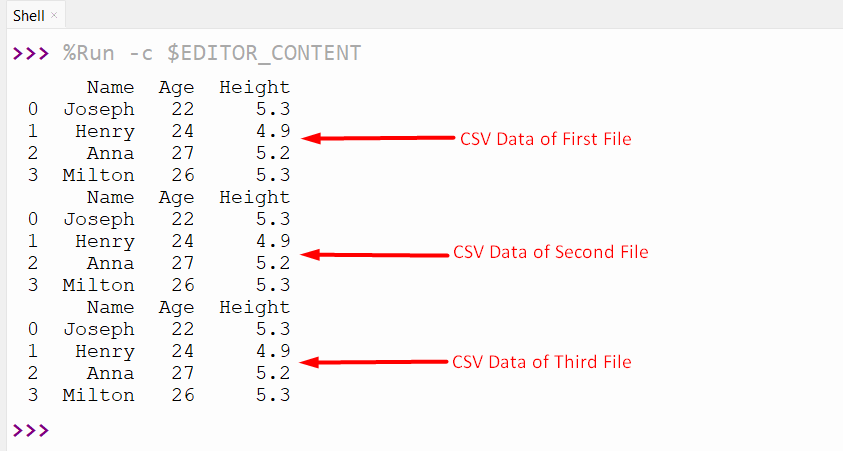

Output

The output shows the contents of each CSV file, read in parallel by combining the “pandas.read_csv()” function with a multiprocessing pool.
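The pool-based approach returns one DataFrame per file. In practice the files often share a layout and need the same read options, so a possible extension is sketched below. The helper name “read_csvs_parallel”, the temporary files, and their columns are hypothetical. The sketch uses “functools.partial” to pass the same keyword arguments to every “read_csv” call and “pandas.concat” to stack the results, and it assumes the list of paths is not empty.

```python
import functools
import multiprocessing
import os
import tempfile

import pandas as pd


def read_csvs_parallel(paths, processes=None, **read_csv_kwargs):
    """Read a non-empty list of CSV files in worker processes and stack them."""
    # Bind the shared keyword arguments. The partial object can be sent to the
    # workers because pd.read_csv is importable by name.
    reader = functools.partial(pd.read_csv, **read_csv_kwargs)
    # Starting more workers than there are files brings no benefit.
    if processes is None:
        processes = max(1, min(len(paths), os.cpu_count() or 1))
    with multiprocessing.Pool(processes=processes) as pool:
        frames = pool.map(reader, paths)  # results come back in input order
    # Combine the per-file DataFrames into one, renumbering the rows.
    return pd.concat(frames, ignore_index=True)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp_dir:
        paths = []
        for i in range(3):
            # Hypothetical input files with identical columns.
            path = os.path.join(tmp_dir, f"part{i}.csv")
            part = pd.DataFrame({"part": [i, i], "value": [i * 10, i * 10 + 1]})
            part.to_csv(path, index=False)
            paths.append(path)
        combined = read_csvs_parallel(paths, usecols=["part", "value"])
    print(combined)
```

Each DataFrame is serialized in the worker and sent back to the parent process, so the gain from parallel reading is usually larger when parsing dominates the runtime and smaller when the files are tiny.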

Conclusion

To improve data loading speed, the “pandas.read_csv()” function can be used together with the multiprocessing module. The multiprocessing module offers a way to speed up data loading by using multiple CPU cores to read several files in parallel. The benefit has limits: starting worker processes and transferring the resulting DataFrames back to the main process add overhead, so the gain depends on the number and size of the files. This Python tutorial presented a guide on using “pandas.read_csv()” with multiprocessing.