When Apple launched the A11 Bionic chip in 2017, it introduced us to a new type of processor, the Neural Engine. The Cupertino-based tech giant promised this new chip would power the algorithms that recognize your face to unlock the iPhone, transfer your facial expressions onto animated emoji and more.

Since then, the Neural Engine has become even more capable, powerful and fast. Even so, many people wonder what exactly the Neural Engine is and what it does. Let’s dive in and see what this chip dedicated to AI and machine learning can do.

Apple Neural Engine Kicked Off the Future of AI and More

Apple’s Neural Engine is Cupertino’s name for its own neural processing unit (NPU). Such processors are also known as deep learning processors, and they handle the algorithms behind AI, augmented reality and machine learning (ML).

The Neural Engine allows Apple to offload certain tasks that the central processing unit (CPU) or graphics processing unit (GPU) used to handle.

You see, an NPU can (and is designed to) handle specific tasks much faster and more efficiently than more generalized processors can.

In the early days of AI and machine learning, almost all of the processing fell to the CPU. Later, engineers tasked the GPU to help. These chips aren’t designed with the specific needs of AI or ML algorithms in mind, though.

Enter the NPU

That’s why engineers came up with NPUs such as Apple’s Neural Engine. Engineers design these custom chips specifically to accelerate AI and ML tasks. Whereas manufacturers design a GPU to accelerate graphics tasks, the Neural Engine boosts neural network operations.
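To make "neural network operations" a little more concrete: most of the work in running a neural network comes down to multiplying inputs by weights and adding up the results. The pure-Python sketch below shows one fully connected layer followed by a ReLU activation. The function name, weights and inputs are made up for illustration, and the code says nothing about how Apple's hardware actually implements the computation.

```python
def dense_relu(inputs, weights, biases):
    """Apply one fully connected layer, then ReLU, to a list of inputs."""
    outputs = []
    for row, bias in zip(weights, biases):
        acc = bias
        for x, w in zip(inputs, row):
            acc += x * w  # one multiply-accumulate step
        outputs.append(max(0.0, acc))  # ReLU: negative sums become 0
    return outputs


# Illustrative values only: 3 inputs feeding 2 output neurons.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 1.0], [-1.0, 0.0, 0.5]]
biases = [0.5, -1.0]

print(dense_relu(inputs, weights, biases))  # [2.0, 0.0]
```

A real model repeats this pattern across many layers and far larger matrices, which is the kind of highly parallel workload an NPU is built to run.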

Apple, of course, isn’t the only tech company to design neural processing units. Google has its TPU, or Tensor Processing Unit. Samsung has designed its own version of the NPU, as have Intel, Nvidia, Amazon and more.

To put it into perspective, by some estimates an NPU can accelerate certain ML computations by as much as 10,000 times compared with a GPU, though the gain depends heavily on the workload. NPUs also consume less power in the process, meaning they’re both faster and more efficient than a GPU for the same task.

Tasks the Apple Neural Engine Takes Responsibility For

It’s time to dive into just what sort of jobs the Neural Engine takes care of. As previously mentioned, every time you use Face ID to unlock your iPhone or iPad, your device uses the Neural Engine. When you send an animated Memoji message, the Neural Engine is interpreting your facial expressions.

That’s just the beginning, though. Cupertino also employs its Neural Engine to help Siri better understand your voice. In the Photos app, when you search for images of a dog, your iPhone does so with ML (hence the Neural Engine).

Initially, the Neural Engine was off-limits to third-party developers. It couldn’t be used outside of Apple’s own software. Apple released the Core ML API to developers with iOS 11 in 2017, and in 2018 it opened the Neural Engine to third-party apps through Core ML. That’s when things got interesting.

The Core ML API allowed developers to start taking advantage of the Neural Engine. Today, developers can use Core ML to analyze video or classify images and sounds. It’s even able to analyze and classify objects, actions and drawings.

History of Apple’s Neural Engine

Since it first announced the Neural Engine in 2017, Apple has steadily made the chip more efficient and powerful. The first iteration had two neural cores and could process up to 600 billion operations per second.

The latest M2 Pro and M2 Max SoCs take that capability much, much further. The Neural Engine in these SoCs has 16 neural cores and can grind through up to 15.8 trillion operations every second.

| SoC | Launched | Process | Neural Cores | Peak Ops/Sec. |
|---|---|---|---|---|
| Apple A11 | Sept. 2017 | 10nm | 2 | 600 billion |
| Apple A12 | Sept. 2018 | 7nm | 8 | 5 trillion |
| Apple A13 | Sept. 2019 | 7nm | 8 | 6 trillion |
| Apple A14 | Oct. 2020 | 5nm | 16 | 11 trillion |
| Apple M1 | Nov. 2020 | 5nm | 16 | 11 trillion |
| Apple A15 | Sept. 2021 | 5nm | 16 | 15.8 trillion |
| Apple M1 Pro/Max | Oct. 2021 | 5nm | 16 | 11 trillion |
| Apple M1 Ultra | March 2022 | 5nm | 32 | 22 trillion |
| Apple M2 | June 2022 | 5nm | 16 | 15.8 trillion |
| Apple A16 | Sept. 2022 | 4nm | 16 | 17 trillion |
| Apple M2 Pro/Max | Jan. 2023 | 5nm | 16 | 15.8 trillion |
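
The peak figures in the table make the generational gains easy to quantify. Here is a minimal Python sketch, with the throughput values copied from the table and expressed in trillions of operations per second. The dictionary and function names are illustrative only.

```python
# Peak Neural Engine throughput in trillions of operations per second,
# copied from the table above. Names are illustrative, not an Apple API.
PEAK_TOPS = {
    "A11": 0.6,
    "A12": 5.0,
    "A13": 6.0,
    "A14": 11.0,
    "M1": 11.0,
    "A15": 15.8,
    "M1 Pro/Max": 11.0,
    "M1 Ultra": 22.0,
    "M2": 15.8,
    "A16": 17.0,
    "M2 Pro/Max": 15.8,
}


def speedup(newer, older="A11"):
    """Return how many times higher the peak throughput of `newer` is."""
    return PEAK_TOPS[newer] / PEAK_TOPS[older]


for chip in ("A12", "A16", "M2 Pro/Max"):
    print(f"{chip}: {speedup(chip):.1f}x the A11")
```

On these numbers, the A12 peaks at roughly 8 times the A11's throughput, and the M2 Pro and M2 Max at roughly 26 times. These are peak figures as listed, so they say nothing about sustained real-world performance.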

Apple has truly molded the iPhone, and now the Mac, experience around its Neural Engine. When your iPhone reads the text in your photos, it’s using the Neural Engine. When Siri figures out you almost always run a specific app at a certain time of the day, that’s the Neural Engine at work.

Since first introducing the Neural Engine, Apple has even embedded its NPU in a task as seemingly mundane as photography. When Apple refers to the image processor making a certain number of calculations or decisions per photo, the Neural Engine is at play.

The Foundation of Apple’s Newest Innovations

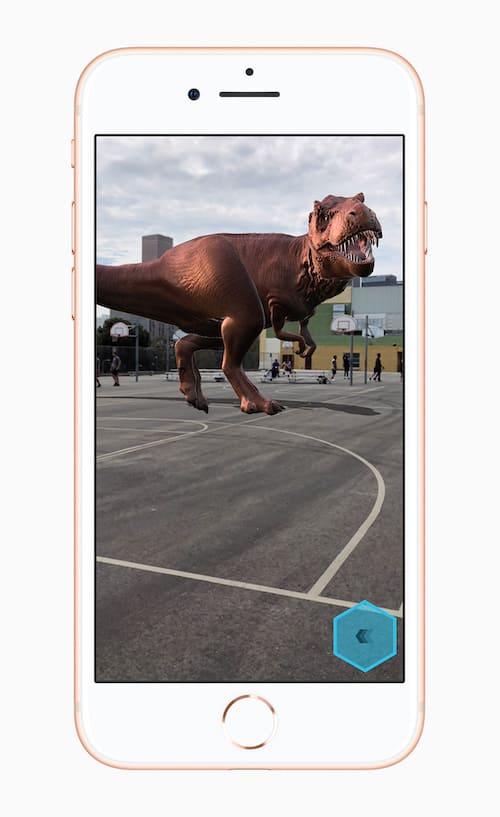

The Neural Engine also helps with everything Apple does in AR. Whether it’s allowing the Measure app to give you the dimensions of an object or projecting a 3D model into your environment through the camera’s viewfinder, that’s ML. If it’s ML, chances are the Apple Neural Engine boosts it tremendously.

This means, of course, that Apple’s future innovations hinge on the Neural Engine. When Cupertino finally unleashes its mixed reality headset on the world, the Neural Engine will be a big part of that experience.

Even further, Apple Glass, the rumored augmented reality glasses expected to follow the mixed reality headset, will depend on machine learning and the Neural Engine.

So, from the simple act of recognizing your face to unlock your iPhone to helping AR transport you to a different environment, the Neural Engine is at play.